WorldTaxAI – Tax chatbot (Spain) with RAG & hybrid search

WorldTaxAI

8 weeks

€6,600

“WorldTaxAI lets us query tens of thousands of Spanish tax documents with reliable, cited answers. Concept search works very well, and the team is responsive to adjustments.”

— WorldTax Team

Project summary

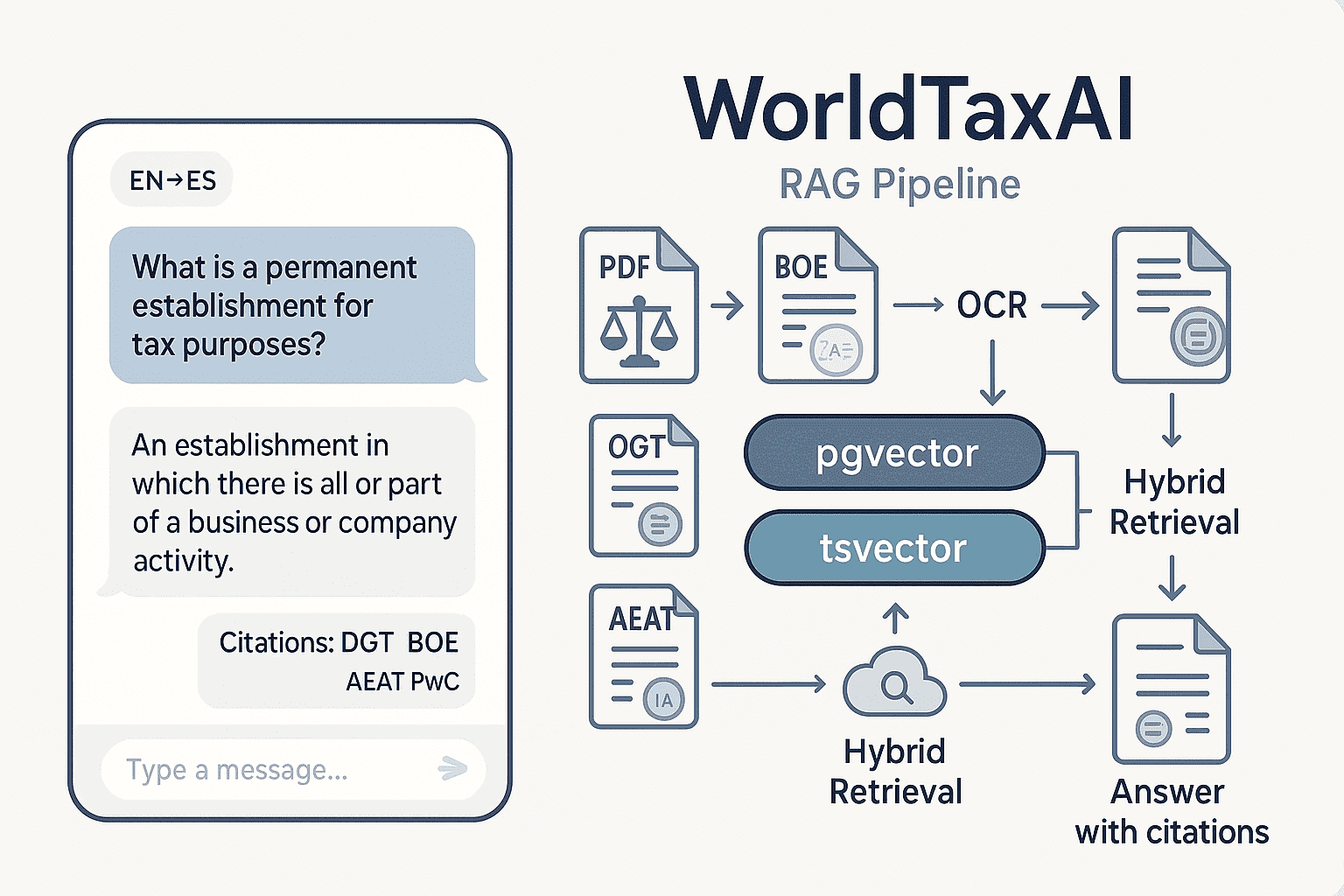

WorldTaxAI is a multilingual (EN↔ES) tax chatbot dedicated to Spanish tax law and administration. It uses a retrieval-augmented generation (RAG) pipeline that combines semantic search (pgvector embeddings) with lexical search (PostgreSQL full-text search over tsvector) to deliver fast, cited answers.

- Corpus: ~60,000 documents (BOE, DGT, AEAT, PwC), unified from heterogeneous PDFs.

- Quality: OCR, cleaning, Unicode normalization, 400–600 token chunking.

- Indexing: PostgreSQL + pgvector (HNSW) + FTS tsvector (GIN).

- App: FastAPI backend, React/Next front-end (streaming), chat history & deletion.

- Multilingual: questions in English, cited results from an ES-heavy corpus.

Objectives

- Fast access to regulations, rulings, notices, commentary.

- Reliable, cited answers (passages + page/section deep-links).

- Multilingual EN↔ES, with robust handling of tax terminology in both languages.

- Cost control (low-cost embeddings, unified Postgres infra).

- Chat features: history, resume, delete, export.

Why hybrid search?

- Semantic (embeddings): captures conceptual proximity; robust to multilingual queries (EN↔ES) and paraphrases.

- Lexical (tsvector): anchors exact references (e.g., modelo 190, art. 10, acronyms).

- Score fusion (plus domain boosts and MMR, maximal marginal relevance): balances recall and precision without hard filters, so the ranking follows the legal intent of the query.
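
The fusion step can be illustrated with a minimal sketch. It assumes min-max normalization and a weighted sum, which is one common way to combine scores and not necessarily the scheme used in the project; the weight `alpha`, the `boosts` multipliers, and the chunk ids are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to [0, 1]; if all scores are equal, every item gets 1.0."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {cid: 1.0 for cid in scores}
    return {cid: (s - lo) / (hi - lo) for cid, s in scores.items()}


def fuse(
    semantic: Dict[str, float],
    lexical: Dict[str, float],
    alpha: float = 0.6,                       # hypothetical weight on the semantic side
    boosts: Optional[Dict[str, float]] = None,  # hypothetical per-chunk domain multipliers
) -> List[Tuple[str, float]]:
    """Combine two score sets into one ranking, best first."""
    sem, lex = min_max(semantic), min_max(lexical)
    boosts = boosts or {}
    fused = {}
    for cid in set(sem) | set(lex):
        # A chunk missing from one result list contributes 0 on that side.
        base = alpha * sem.get(cid, 0.0) + (1 - alpha) * lex.get(cid, 0.0)
        fused[cid] = base * boosts.get(cid, 1.0)
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


semantic_hits = {"a": 0.9, "b": 0.5, "c": 0.1}  # e.g. cosine similarities
lexical_hits = {"b": 12.0, "d": 3.0}            # e.g. full-text rank values
ranking = fuse(semantic_hits, lexical_hits)
```

In this example chunk `b` ranks first because it scores on both sides, which is the behavior the hybrid approach is after: an exact reference match reinforces a conceptually close passage.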

Architecture & pipeline

1) Ingestion & OCR

- Text extraction from born-digital PDFs & scans (OCR).

- Language detection, Unicode normalization, removal of headers/footers, page numbers.
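
The cleaning steps above can be sketched as follows. This is an illustrative heuristic, not the project's actual code: it assumes NFC normalization (NFKC would also rewrite ordinal marks such as "º", which matter in Spanish legal text), treats a line as a header or footer when it repeats on most pages, and uses a hypothetical page-number pattern.

```python
import math
import re
import unicodedata
from collections import Counter
from typing import List

# Hypothetical pattern for page-number lines: "3", "2/10", "Pág. 3", "Página 2 de 3".
PAGE_NUMBER = re.compile(
    r"^(?:p[áa]g(?:ina)?\.?\s*)?\d+(?:\s*(?:/|de)\s*\d+)?$", re.IGNORECASE
)


def normalize(text: str) -> str:
    """Apply NFC, drop soft hyphens, collapse runs of spaces/tabs/NBSP, and strip."""
    text = unicodedata.normalize("NFC", text)
    text = text.replace("\u00ad", "")
    return re.sub(r"[ \t\u00a0]+", " ", text).strip()


def clean_pages(pages: List[str], min_share: float = 0.6) -> List[str]:
    """Remove page-number lines and lines repeated on at least `min_share` of pages."""
    split = [[normalize(line) for line in page.splitlines()] for page in pages]
    # Count each distinct line once per page.
    counts = Counter(line for lines in split for line in set(lines) if line)
    threshold = max(2, math.ceil(min_share * len(pages)))
    repeated = {line for line, n in counts.items() if n >= threshold}
    cleaned = []
    for lines in split:
        kept = [
            line for line in lines
            if line and line not in repeated and not PAGE_NUMBER.match(line)
        ]
        cleaned.append("\n".join(kept))
    return cleaned


pages = [
    "BOLETÍN OFICIAL DEL ESTADO\nArti\u0301culo 10. Exenciones\n1",
    "BOLETÍN OFICIAL DEL ESTADO\nLas rentas obtenidas\nPágina 2 de 3",
    "BOLETÍN OFICIAL DEL ESTADO\nDisposición final\n3",
]
cleaned = clean_pages(pages)
```

A body line that consists only of a number would also be dropped by this pattern, so a real pipeline would likely restrict the check to the first and last lines of each page.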

2) Structuring & chunking

- 400–600 token segments with section path and page numbers.

- Quality checks, deduplication, timestamps.
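
A minimal sketch of the chunking step, assuming paragraphs arrive already tagged with their page number. Whitespace-separated words stand in for model tokens here (an assumption; a real tokenizer would give different counts), and the `Chunk` type and function names are hypothetical. The sketch only enforces the 600-token ceiling: the 400-token floor is approximated by greedy packing, and a single paragraph longer than the ceiling is kept whole.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Chunk:
    section_path: str
    pages: List[int]
    text: str
    n_tokens: int


def count_tokens(text: str) -> int:
    # Assumption: word count is used as a rough proxy for token count.
    return len(text.split())


def chunk_section(
    section_path: str,
    paragraphs: Iterable[Tuple[int, str]],  # (page number, paragraph text)
    max_tokens: int = 600,
) -> List[Chunk]:
    """Greedily pack consecutive paragraphs into chunks of at most max_tokens."""
    chunks: List[Chunk] = []
    buf: List[str] = []
    pages: List[int] = []
    size = 0

    def flush() -> None:
        nonlocal buf, pages, size
        if buf:
            chunks.append(
                Chunk(section_path, sorted(set(pages)), "\n\n".join(buf), size)
            )
        buf, pages, size = [], [], 0

    for page, para in paragraphs:
        n = count_tokens(para)
        # Close the current chunk if this paragraph would push it past the ceiling.
        if size and size + n > max_tokens:
            flush()
        buf.append(para)
        pages.append(page)
        size += n
    flush()
    return chunks


paragraphs = [(1, "a " * 250), (1, "b " * 250), (2, "c " * 250)]
chunks = chunk_section("Ley 35/2006 > Título I > Art. 10", paragraphs)
```

Keeping the section path and page list on every chunk is what later makes page-level citations possible.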

3) Document enrichment

- Metadata: jurisdiction, document_type, doc_title, source_name, year.

- Topics: primary_topic (closed list), secondary_topics (3–5 controlled tags).

- Short abstract for previews + optional doc-level embedding.

4) Embeddings & index

- Model: text-embedding-3-small (OpenAI).

- Storage: PostgreSQL + pgvector vector(1536) + HNSW index.

- Parallel lexical search via tsvector + GIN index.
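
As a sketch of what this storage layout might look like, the snippet below holds the SQL as Python constants. The table and column names are hypothetical, the `'spanish'` text search configuration is an assumption, and the `%(name)s` placeholders assume a psycopg-style driver; nothing here is executed against a database.

```python
from typing import Sequence

# Hypothetical schema: one row per chunk, with a 1536-dimension embedding
# and a generated tsvector column for Spanish full-text search.
SCHEMA_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;

CREATE TABLE IF NOT EXISTS chunks (
    id           bigserial PRIMARY KEY,
    doc_title    text NOT NULL,
    section_path text,
    page         int,
    content      text NOT NULL,
    embedding    vector(1536),
    tsv          tsvector GENERATED ALWAYS AS (to_tsvector('spanish', content)) STORED
);

CREATE INDEX IF NOT EXISTS chunks_embedding_hnsw
    ON chunks USING hnsw (embedding vector_cosine_ops);
CREATE INDEX IF NOT EXISTS chunks_tsv_gin
    ON chunks USING gin (tsv);
"""

# Semantic side: <=> is pgvector's cosine distance, so 1 - distance is a similarity.
SEMANTIC_SQL = """
SELECT id, 1 - (embedding <=> %(qvec)s::vector) AS score
FROM chunks
ORDER BY embedding <=> %(qvec)s::vector
LIMIT %(k)s;
"""

# Lexical side: match the query against the tsvector column and rank the hits.
LEXICAL_SQL = """
SELECT id, ts_rank(tsv, q) AS score
FROM chunks, websearch_to_tsquery('spanish', %(qtext)s) AS q
WHERE tsv @@ q
ORDER BY score DESC
LIMIT %(k)s;
"""


def to_vector_literal(embedding: Sequence[float]) -> str:
    """Format an embedding in pgvector's text input form, e.g. '[0.5,-1.0]'."""
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


params = {"qvec": to_vector_literal([0.5, -1.0]), "k": 20}
```

Running both queries and merging their results in application code keeps the two indexes independent, which fits the unified-Postgres goal stated in the objectives.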

5) Hybrid retrieval & ranking

- Cross-lingual vector search (EN↔ES) plus exact-match lexical search.

- Normalized score fusion, domain boosts by topics/type, MMR for diversity, light re-rank.

- Select 5–8 passages with citations (title, section, page).
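
The MMR diversity step can be sketched in plain Python. This follows the standard maximal marginal relevance formulation with cosine similarity; the trade-off parameter `lam`, the chunk ids, and the toy vectors are hypothetical, and the project's actual parameters are not stated in this write-up.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mmr(
    relevance: Dict[str, float],
    vectors: Dict[str, Sequence[float]],
    k: int = 8,
    lam: float = 0.7,  # 1.0 = pure relevance, lower values favor diversity
) -> List[str]:
    """Pick up to k ids, trading relevance against similarity to earlier picks."""
    selected: List[str] = []
    candidates = set(relevance)
    while candidates and len(selected) < k:
        def gain(cid: str) -> float:
            redundancy = max(
                (cosine(vectors[cid], vectors[s]) for s in selected), default=0.0
            )
            return lam * relevance[cid] - (1 - lam) * redundancy

        # Sorting first makes tie-breaking deterministic.
        best = max(sorted(candidates), key=gain)
        selected.append(best)
        candidates.remove(best)
    return selected


relevance = {"a": 0.9, "a_dup": 0.88, "b": 0.6}
vectors = {"a": [1.0, 0.0], "a_dup": [1.0, 0.01], "b": [0.0, 1.0]}
picked = mmr(relevance, vectors, k=2)
```

With `lam=0.7` the near-duplicate `a_dup` is skipped in favor of the less relevant but distinct `b`, which is the effect wanted when several chunks quote the same provision.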

6) API & Front-end

- FastAPI: auth, sessions, pagination, purge.

- React/Next (streaming): i18n, copy cited snippets, export conversations.

- Speech-to-text endpoint (optional).

7) Ops & security

- CI/CD, metrics (latency, tokens, Recall@k), budgets.

- Privacy: data sent through the OpenAI API is not used to train OpenAI models by default; zero data retention is optional.

- Row-level security (RLS) and read-only permissions, minimal logs, per-segment traceability.
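
The retrieval metrics mentioned above (Recall@k, and the related Precision@k) can be computed as in this small sketch. It assumes a hand-labeled set of relevant chunk ids per test query; the ids shown are hypothetical.

```python
from typing import List, Set


def recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Share of the relevant ids that appear in the top k retrieved ids."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def precision_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Share of the top k retrieved ids that are relevant."""
    if k <= 0:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / k


retrieved = ["c1", "c7", "c3", "c9"]   # ranked output for one test query
relevant = {"c1", "c3", "c5"}          # labeled ground truth for that query
r3 = recall_at_k(retrieved, relevant, 3)
p3 = precision_at_k(retrieved, relevant, 3)
```

Averaging these values over a fixed query set after each index or ranking change gives a simple regression signal for retrieval quality.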

User experience

- Per-user history: resume, rename, delete.

- Clickable citations to the source (with context).

- Grounded answers: the model answers from the retrieved snippets rather than inventing content.

- Multilingual: ask in EN, results in ES, optional EN summary.

- Streaming answers; voice input (optional).
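
One way to obtain grounded, cited answers is to number the retrieved passages in the prompt and ask the model to cite those numbers. The sketch below builds such a prompt in the role/content message format that chat APIs commonly accept; the `Passage` type, the system instruction wording, and the sample passage are hypothetical, and no API call is made.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Passage:
    title: str
    section: str
    page: int
    text: str


# Hypothetical instruction; the project's actual prompt is not shown in this write-up.
SYSTEM = (
    "Answer only from the numbered passages. Cite them as [n]. "
    "If the passages do not contain the answer, say so."
)


def build_messages(question: str, passages: List[Passage]) -> List[Dict[str, str]]:
    """Number each passage with its citation label and append the user question."""
    blocks = [
        f"[{i}] {p.title}, {p.section}, p. {p.page}\n{p.text}"
        for i, p in enumerate(passages, start=1)
    ]
    user = "Passages:\n\n" + "\n\n".join(blocks) + f"\n\nQuestion: {question}"
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": user},
    ]


messages = build_messages(
    "Which income is exempt?",
    [Passage("BOE-A", "Art. 10", 3, "Texto del artículo.")],
)
```

Because each `[n]` maps back to a title, section, and page, the front-end can turn the model's citations into clickable links to the source.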

Results & impact

- Previously scattered content is now accessible in seconds.

- High recall on technical queries (retención dividendos — modelo 190, establecimiento permanente, etc.).

- Significant time savings for analysts & attorneys.

- Ready foundation for monitoring & analytics (trends, clustering, dedup detection).

Tech stack

- Backend: Python, FastAPI, SQLAlchemy.

- Database: PostgreSQL, pgvector, tsvector, Docker.

- LLM & embeddings: OpenAI (chat model gpt-4o-mini, embedding model text-embedding-3-small).

- Front-end: React/Next, streaming, i18n.

- Ops: CI/CD, monitoring, budgets.

Timeline & budget

- Phase 1 — scraping & preparation: delivered, ~2–3 weeks.

- Phase 2 — RAG + API + front + deployment: ~4 weeks.

- Phase 2 quote: €6,600 excl. VAT (21 days @ €300/day).

- Recurring API costs: low (one-shot indexing, lightweight queries).

Want a POC on your corpus (EN/ES) or an extension to your internal portal? Get a demo.

Client testimonial

“WorldTaxAI lets us query tens of thousands of Spanish tax documents with cited, trustworthy answers. Concept-based search works well, and the team is responsive to adjustments.”

— WorldTax Team

FAQ

Does the chatbot “learn” from our data?

No. Data sent through the OpenAI API is not used to train OpenAI models by default. Embeddings and texts remain private.

Can I ask in English if the corpus is in Spanish?

Yes. Multilingual embeddings match English questions to Spanish passages, and full-text search is bilingual, with language routing for each query.

How do you ensure quality?

Every answer carries citations, and the system is evaluated continuously (Recall@k, Precision@k, faithfulness).

How does it scale?

pgvector handles hundreds of thousands of vectors, HNSW or IVFFlat indexes keep search fast, and the API is stateless.

Security & compliance?

Private PostgreSQL, row-level security (RLS) with read-only permissions, history deletion, auditable CI/CD, minimal logs.

Resources & next steps

Sample technical queries

- “Retención sobre dividendos no residentes — modelo 190 (EN→ES)”

- “DGT criteria on digital permanent establishment (EN→ES)”

- “Double tax relief exemption art. 10 — BOE 202x (ES)”

Product extension ideas

- Stronger re-ranking (cross-encoder), query rewriting (expansion).

- Quality dashboard (coverage, freshness, terminology drift).

- Monitoring: incremental ingestion (BOE/DGT/AEAT webhooks), topic alerts.

datamonkeyz
